Building a Culture of Optimization, Part 2: Good Test Designs

In part 2 of this 5-part blog series about ‘Building a Culture of Optimization’ I’m going to talk about the importance of teaching good test designs. You can see part 1, on teaching the basics, here.

Part 2: Good Test Designs

You can’t have a good test without a good test design.

One of the first things I do when a new test idea surfaces is sit down with the key stakeholders & test proposers to understand the details of what they’d like to test. We’ll talk through the variables that are going to be tested and how best to set up & design the test, and make sure we are on the same page about potential test outcomes and about testing in a clean and consistent manner.

To start, share what type of test is appropriate to meet the need:

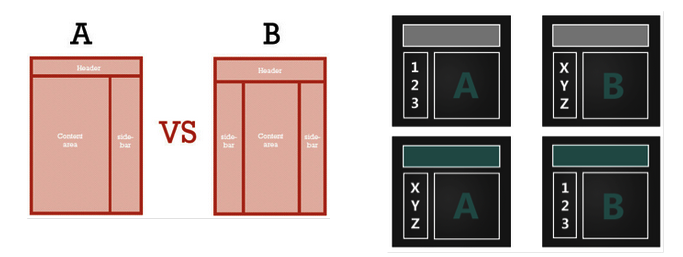

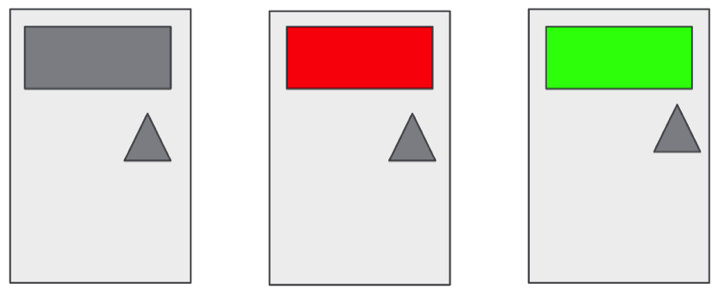

A/B test vs Multivariate test

A/B/n test: Compares two (or more) different versions

Multivariate test (MVT): Testing variations of multiple elements in one test

In this example, the A/B test on the left is looking at a 2-column vs 3-column layout while keeping all other variables consistent. The MVT on the right is looking at 2 different elements in combination – which color banner works best and whether numbers or letters on the side column perform better.

Next, ensure your team understands the basic test inputs: what makes a control versus a test variation.

Control: the current state of your website – no changes, should be the same as what is live to your traffic during the time of the test.

Variable: the element on the site you want to change. For example, button color would be one variable.

Variation: each test variation should change an isolated number of test variables. For example, test variation 1 could have a blue button while the control has a green button. Everything else should remain constant so you can isolate the impact of the one variable you are testing.
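One way to make the "isolate one variable" rule concrete is to describe each page version as a set of named variables and list what differs from the control. The sketch below is only an illustration: the function name `changed_variables` and the specific variable names and values (other than the green and blue buttons mentioned above) are hypothetical.

```python
def changed_variables(control: dict, variation: dict) -> list:
    """Return the names of variables whose values differ from the control."""
    keys = sorted(set(control) | set(variation))
    return [k for k in keys if control.get(k) != variation.get(k)]


# Hypothetical page description: only the button colors come from the example above.
control = {"button_color": "green", "banner_color": "gray", "button_placement": "top"}

# Clean variation: identical to the control except for one variable.
variation_1 = {**control, "button_color": "blue"}

# Mixed variation: three variables change at once, so their effects cannot be separated.
mixed = {"button_color": "blue", "banner_color": "red", "button_placement": "bottom"}

print(changed_variables(control, variation_1))  # ['button_color']
print(changed_variables(control, mixed))
```

A variation that reports more than one changed variable is not necessarily wrong, but it is a prompt to ask whether the test should be split up or run as an MVT.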

Note: when designing a test I always recommend having a static control rather than relying on the untested portion of your traffic for comparison. This is important for a couple of reasons: 1) you’ll be able to easily compare numbers because each variation and the control will get the same percentage of traffic, and 2) outside influences on your traffic will be shared evenly across the control and the test variations. Trust me on this one rather than learn the hard way (I learned the hard way, and it wasn’t pretty trying to explain to senior stakeholders why I had to throw out the results of what they thought was a perfectly fine test).
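As a rough sketch of what a static control with an even split can look like, the snippet below assigns each visitor to one arm by hashing a visitor ID. This is an assumption about how a split might be implemented, not a description of any particular testing tool; `assign_arm`, the arm names, and the `visitor-N` IDs are all hypothetical.

```python
import hashlib


def assign_arm(visitor_id: str, arms: list) -> str:
    """Deterministically map a visitor to one arm, with roughly equal shares."""
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    return arms[int(digest, 16) % len(arms)]


# The control is an explicit arm that receives the same share as each variation.
ARMS = ["control", "variation_1", "variation_2"]

counts = {arm: 0 for arm in ARMS}
for i in range(30000):
    counts[assign_arm(f"visitor-{i}", ARMS)] += 1

print(counts)  # each arm should receive roughly a third of the visitors
```

Because the control is measured over the same period and the same share of traffic as the variations, anything that shifts traffic during the test (a campaign, a holiday, an outage) affects every arm alike.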

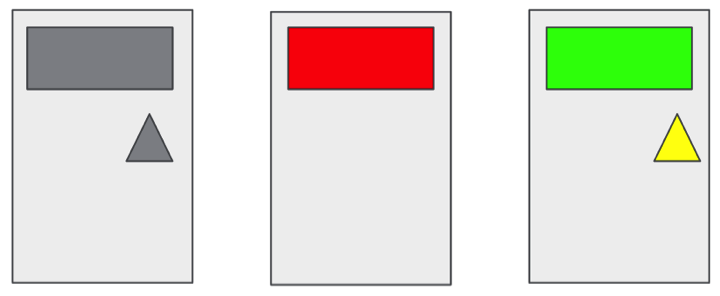

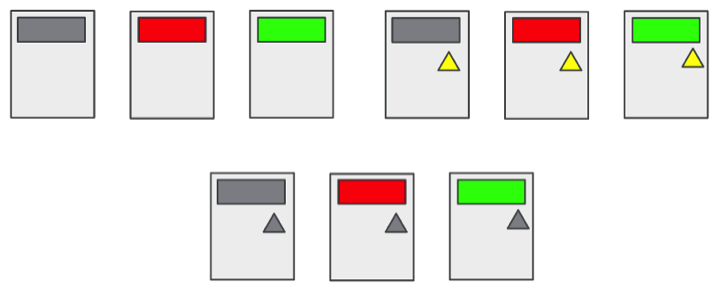

A look at good vs bad test designs

Bad test design:

Why is this a bad test design? Too many variables are being tested: this test mixes banner color, placement of a button, and button color. Here are a couple of better test designs for these same variables:

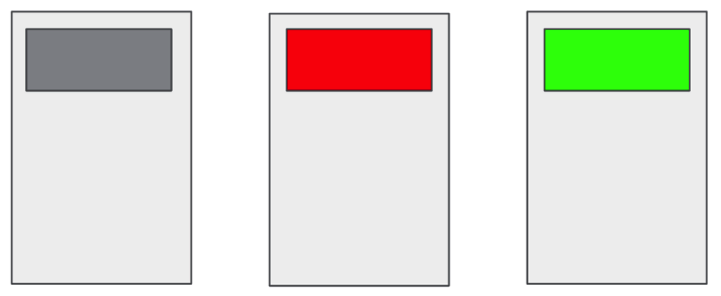

Test 1:

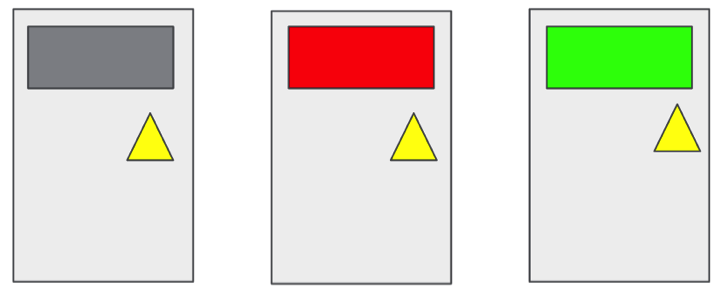

Test 2:

Test 3:

You could also test this as one massive A/B/n test or use some stats and a matrix calculator to come up with the necessary variations for a multivariate test:
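To get a feel for how many variations the multivariate route implies, the sketch below enumerates a full-factorial design, meaning every combination of every level. This is the simplest approach and not necessarily what a matrix calculator would produce, since such tools are often used to pick a smaller subset of combinations. The three variables come from the example above, but the two levels listed for each are hypothetical.

```python
from itertools import product

# Variables from the example; the specific levels are made up for illustration.
variables = {
    "banner_color": ["blue", "red"],
    "button_placement": ["left", "right"],
    "button_color": ["green", "orange"],
}

# Full factorial: one test cell per combination of levels.
names = list(variables)
cells = [dict(zip(names, combo)) for combo in product(*variables.values())]

print(len(cells))  # 2 * 2 * 2 = 8 cells
for cell in cells:
    print(cell)
```

The cell count multiplies with every variable and level added, and each cell needs its own share of traffic, which is worth weighing before choosing between one large test and several smaller ones.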

So why is this all important?

Simple – without a good test design you will not be able to report (with confidence) that what you are testing is any better or worse than what you currently have. Further, if you were to implement the results of a test with a bad design (one that did not control for variables) you may see very different results from what you anticipated, because too many variables were at play. Finally, if you do find a large win with a bad test design (e.g. one with too many variables) you may not be able to tease out which variable truly caused the positive impact. This could limit your ability to further optimize these variables down the road.

That’s it, good test design in a few simple steps:

1. Choose the right kind of test, A/B/n vs MVT

2. Create test variations to isolate the elements you want to test

3. Ensure you have a control and variations with a controlled number of variables

Part 3 of this 5-part blog series on ‘Building a Culture of Optimization’ will focus on the math, stay tuned!

Happy testing!


vishwanath sreeraman

I attended your DAA LA session in Feb. and am glad to see this topic in your blog as well. Thanks! In the past 6 months I have been actively involved in Testing and Optimization, and find a lot of common threads in terms of learning from mistakes.

I also find it interesting that you are able to run a lot of MVT tests. We find it logistically infeasible to run MVTs since the UX/design team has to come up with a whole bunch of different designs to be tested simultaneously. As a result, we mostly end up doing A/B, A/B/C, or 2*2 testing (the closest we come to MVT).

Also, we mostly expose our tests to close to 100% of the traffic, unless we see drastic changes to user behavior in the first few hours of activating the campaign. Do you see any pitfalls to that? Any bad experiences with exposing a test to all of your traffic? I am trying to come up with a list of best practices for our testing process, and your insights will be welcome.

Thanks

vishwanath sreeraman
