Testing with a Rapid Optimization Plan

If you’ve ever set out to A/B test a whole site redesign, you have probably come across the question: ‘What do we do if the new site, which we’ve spent so much time and money on, doesn’t win?’

That’s a fair question. A very fair one. In fact, if you are not asking yourself that question before starting down the road of testing a site redesign, you should reevaluate your testing plan, because it’s a very real possibility that the new site will not, in fact, perform better than the old one.

That could happen for many reasons:

- users are used to your old site, and seeing a new one may be jarring or disorienting for them

- you may have optimized the heck out of the old one already, so it is performing at its peak when you test the new one against it

- the new site may have different actions or KPIs that you are driving towards, and your testing goals need to be updated to reflect that

- and many more specific reasons…

A while back I wrote a post about Data-Driven Design, in which I detailed how, over the course of 2 years, we went from an old, outdated website to a shiny new one that performed on par with or better than our highly optimized old site. What I didn’t go into in that post was that, initially, the new site didn’t perform as well. After we’d launched it and realized that conversions were down, I put in place what I called a ‘Rapid Optimization Plan’ (ROP for short).

So what is a ‘Rapid Optimization Plan’?

I made it up. Who knows if someone else has created something similar (I’m sure they have) or named it some variation on ROP (perhaps). What it meant to my org, however, was the following:

Step 1: Analysis

Analyze which CTAs were performing on par with or better than the old site, and which were underperforming. What content were users engaging with? Did the navigation seem intuitive? Were there elements on the new site that might have caused friction in the user experience? Analyze the qualitative data: what were users telling us they liked and disliked? In short, I used every ounce of analytics data available to me at the time to *quickly* figure out what had gone wrong and where.
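To make the first question concrete, here is a minimal sketch of a per-CTA comparison between the old and new site. The `CtaStats` record, the `compare_ctas` function, and the 5% relative tolerance are all hypothetical illustrations, not the tooling used in the post; real numbers would come from whatever analytics platform you use.

```python
from dataclasses import dataclass


@dataclass
class CtaStats:
    """Impressions and conversions for one CTA on one version of the site (hypothetical schema)."""
    name: str
    impressions: int
    conversions: int

    @property
    def rate(self) -> float:
        # Conversion rate; guard against CTAs that were never shown.
        return self.conversions / self.impressions if self.impressions else 0.0


def compare_ctas(old: list[CtaStats], new: list[CtaStats], tolerance: float = 0.05) -> dict[str, str]:
    """Label each new-site CTA as 'on par/better', 'underperforming', or 'no baseline'.

    A CTA counts as underperforming when its new-site rate falls more than
    `tolerance` (relative) below its old-site rate. The 5% default is an
    arbitrary illustration, not a recommended threshold.
    """
    old_by_name = {c.name: c for c in old}
    verdicts: dict[str, str] = {}
    for cta in new:
        baseline = old_by_name.get(cta.name)
        if baseline is None or baseline.rate == 0:
            # Nothing comparable on the old site.
            verdicts[cta.name] = "no baseline"
            continue
        relative_change = (cta.rate - baseline.rate) / baseline.rate
        verdicts[cta.name] = "underperforming" if relative_change < -tolerance else "on par/better"
    return verdicts


# Made-up counts, purely to show the call shape.
old_site = [CtaStats("start_trial", 10000, 500), CtaStats("pricing", 8000, 240)]
new_site = [CtaStats("start_trial", 10000, 430), CtaStats("pricing", 8000, 250)]
print(compare_ctas(old_site, new_site))
```

A flag like this is only a prompt for a closer look: a raw drop in rate can still be noise, so it would need a significance check and the qualitative data before it drives a follow-up test.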

Step 2: Design based on Data

Next, I used the information gathered in step 1 to recommend follow-up tests that we could launch quickly, with the goal of bringing underperforming CTAs back up to par with the old site. In the case of the Google Apps for Work website, this included reverting to a bright green button color (Marketing had really liked the new cool blue, but it clearly didn’t stand out as much), adding color and imagery back to the pricing page, and improving a few simple navigation and animation elements for discovery and click-through. I designed these as three single-page tests, launched at the same time and targeting different elements on different pages, so that they could be analyzed both separately and together. This let us move quickly and try to improve the site’s performance from multiple angles.

Side note: I’m not usually a fan of button color testing, because we can do so much more (bigger, deeper, more impactful user-experience tests), but in this case the data warranted it.

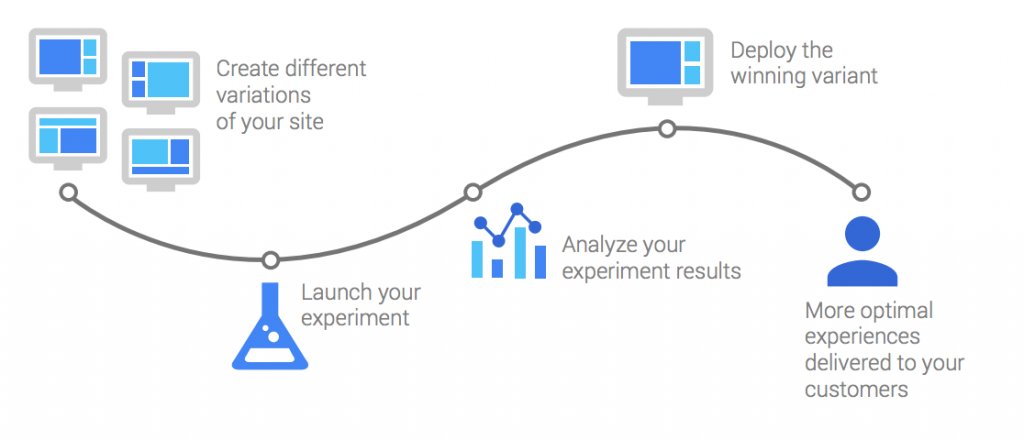

Step 3: Test, Analyze, Rinse, Repeat

Once the tests were designed and approved, we quickly set them in motion and watched them for a solid 2 weeks to account for week-over-week fluctuations. We also launched a follow-up qualitative survey via Google Consumer Surveys (GCS) so that we were capturing user actions and feedback from more than one angle.
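Running for whole weeks matters because weekday and weekend traffic often behave differently. As a sketch, the hypothetical helpers below round a test window up to complete 7-day cycles and confirm that every weekday is equally represented. The 14-day minimum mirrors the post; the helper names and the round-up-to-whole-weeks rule are assumptions for illustration.

```python
from datetime import date, timedelta


def full_week_end_date(start: date, min_days: int = 14) -> date:
    """Return the last day of a test window that starts on `start`, lasts at
    least `min_days`, and is rounded up to a whole number of 7-day weeks.
    """
    if min_days < 1:
        raise ValueError("min_days must be positive")
    weeks = -(-min_days // 7)  # ceiling division without floats
    return start + timedelta(days=weeks * 7 - 1)


def weekday_counts(start: date, end: date) -> dict[int, int]:
    """Count how often each weekday (0=Monday ... 6=Sunday) falls in [start, end]."""
    counts = {d: 0 for d in range(7)}
    day = start
    while day <= end:
        counts[day.weekday()] += 1
        day += timedelta(days=1)
    return counts


start = date(2024, 3, 6)  # an arbitrary Wednesday
end = full_week_end_date(start)
print(end, weekday_counts(start, end))
```

Asking for 15 days with this rule yields a 21-day window rather than stopping mid-week, which keeps the weekday mix identical across the whole test.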

The results were promising. Not only did we see improvement across all the pain point areas, but there was enough overall improvement to make those changes permanent and launch the new and improved site to 100% of our traffic. Success!

Step 4: Back to the drawing board – Testing is never done!

Once we had successfully launched the new site to 100%, we set out on our normal test ideation and prioritization process to begin A/B testing the new site for more continued improvements.

Takeaways:

The Rapid Optimization Plan (ROP) had a tremendous impact on the overall business strategy when we were launching the new website. When things didn’t go quite as we’d hoped the first time around, this plan gave the organization a light to look towards. It was a detailed analysis, a plan of action, and a what-if safeguard that allowed us to press on quickly and focus on optimization rather than worry about negative business impact. The ROP helped us quickly identify the underperforming areas and launch several follow-up tests, which ultimately led to a new, optimized, high-performing website for the business.

Want an example ROP template? Here you go.

Michael Brown

First, thanks for the read, Krista. Most customers expect instant results when a new site launches, but every new launch sees its results fluctuate. I think it is very important to reiterate to the customer, both before and after launch, why a site might underperform. The most difficult thing, I have found, is determining how long that period lasts after launch and when to expect improved results. What are your thoughts based on your experience?

Krista

Hi Michael, thanks for your comment. I think it really depends on the business, the goals, the recency/frequency effects a site may see from new vs. returning users, etc. For the GafW business, we used the 30-day trial period as our guidepost and aimed for flat to positive results after 30 days. Each business has to determine what makes the most sense for them and their bottom line.

Henrik Baecklund

Hi Krista! Thank you for an inspiring post!

In step 3 you mention that you let the tests run for 2 weeks, but how do you determine a winner? Would love to hear more on that topic!

Krista

It depends on the platform being used for testing. Some will do the math for you; with others, you’ll need to plug the data into a statistical significance calculator. Then you make sure your results align with the business objectives, and call a winner if the data permits.
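For readers curious about the math a significance calculator does, many of them compare two conversion rates with a two-proportion z-test. The sketch below is one such test, not necessarily what any particular testing platform uses. It assumes independent visitors and reasonably large samples, and `two_proportion_z_test` is a hypothetical name.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided two-proportion z-test comparing conversion rates of A and B.

    Returns (z, p_value). Uses the pooled proportion under the null
    hypothesis that both variants convert at the same rate.
    """
    if min(n_a, n_b) <= 0:
        raise ValueError("sample sizes must be positive")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both variants converted at 0% or 100%: nothing to distinguish.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up counts: control converts 500/10,000, variant 580/10,000.
z, p = two_proportion_z_test(500, 10000, 580, 10000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With the common (but arbitrary) cutoff of 0.05, a p-value below it would be read as statistically significant; as the reply above notes, the result still has to make sense against the business objectives before anyone calls a winner.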